A retail giant’s development ops needed an easier way to evaluate and store test artifacts.

COLLABORATOR

The Home Depot

SKILLS

Contextual Interviews, User Journeys, Whiteboarding, Process Flows, Wireframing, Mockups, Prototyping

TEAM

Jess Lewis, Amber Hansford, Jason Rowe, Chris Gruel, Chris Elder, Elan Grossman, Yasmin Abdhulla, Mone’t Fulgham

―

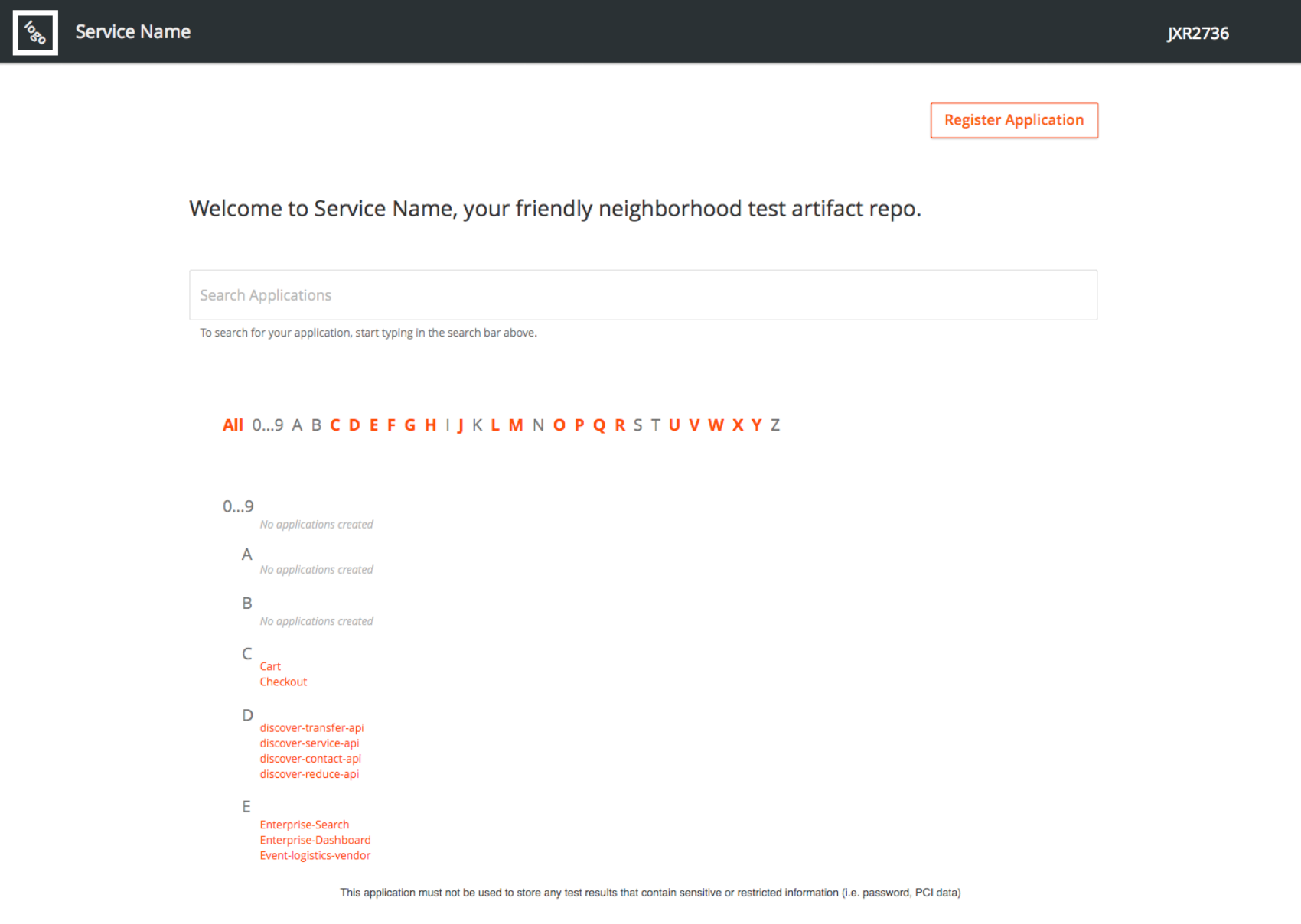

QualityHub is an internal product, used only by teammates within The Home Depot. They use it to store developers’ test artifacts so the company meets federal compliance requirements for code audits.

Home Depot developers and auditors hate code audits because they take too much time.

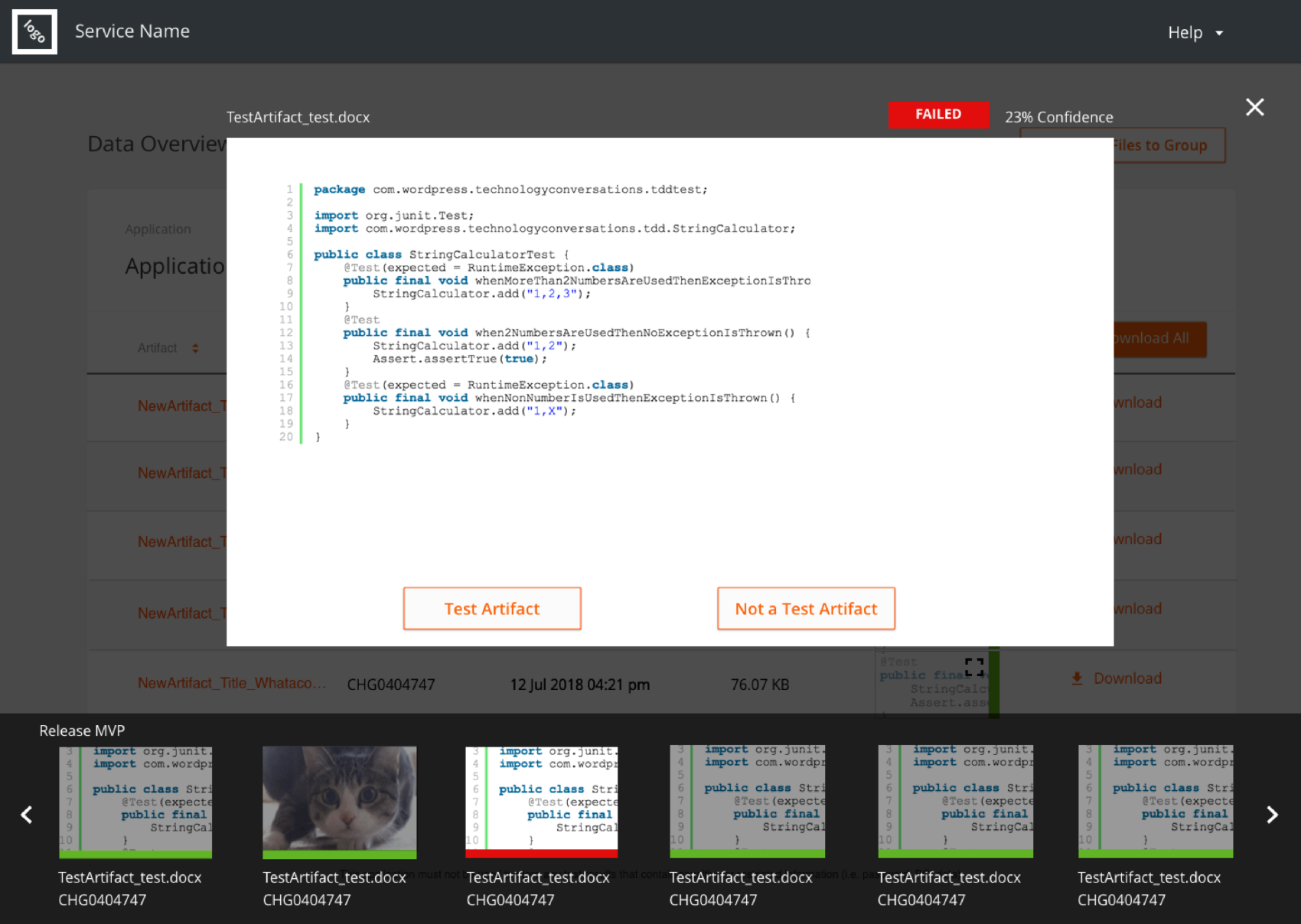

So, we used machine learning to pre-evaluate those artifacts.

What we made together:

How we did it:

1.

Design evaluation. Team huddle.

Received handoffs from the previous designer and evaluated the current state, finding some questionable modal usage. Worked with an engineer to create a low-resource solution to the modal issues without impacting delivery.

Talked with teammates to gather their thoughts on the product and its direction, then worked with the Product Manager to define product, UX, and research needs.

Together, we evaluated the timeline to MVP and defined a research and design strategy. While defining it, I learned about the machine learning ethics space and used that knowledge to shape the design strategy.

Prior designs

Immediate improvements

2.

ML architecture. Process definition. Initial design concept.

Defined the machine learning database structure with senior engineers. Defined product strategy with the Product Manager, and together we prioritized features based on that strategy.

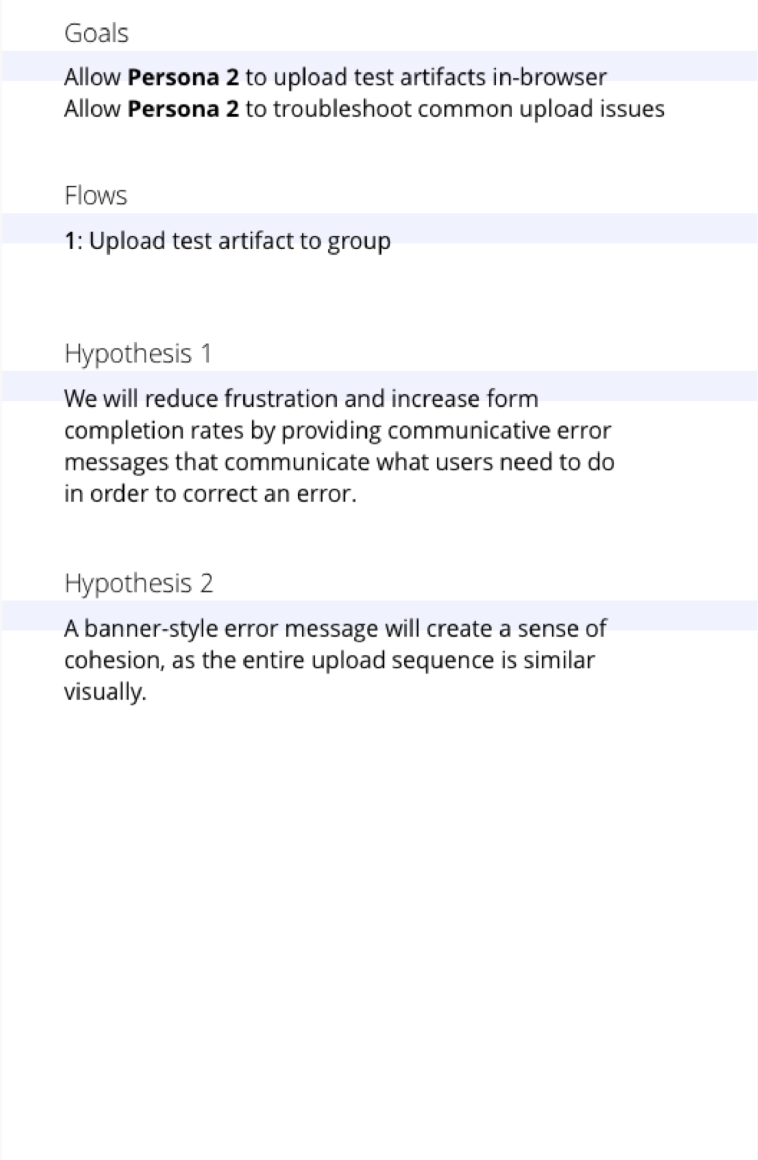

I came up with a hypothesis-based design process that aimed to give post-launch qualitative research a solid basis for success metrics. It captured:

- Personas impacted
- User flows impacted
- A hypothesis of the intended impact on each impacted persona and flow

Sharing an initial design concept with the team sparked a fruitful set of discussions that surfaced each teammate’s thoughts about user impact and feature prioritization. Most importantly, it raised a wealth of burning questions.

Process design

Initial design concept

3.

User interviews.

Based on discussions around the initial design, I conducted contextual user interviews with 4 development managers and 5 developers to answer the team’s burning questions.

Feature definition and strategy. Design iteration approach.

Based on teammate and user feedback, I worked alongside the Product Manager and leadership to develop a scaffolded approach to feature definition.

We defined a long-range vision, then created logical scaffolds leading up to it. This approach reduced development rework and kept the team aligned on a shared vision.

4.

Scaffold two | 12 months

Scaffold one | 6 months

MVP | 1 month

Continuous delivery.

I worked at least two months ahead of development, balancing QA of ready-to-ship code, delivering iterations of in-development designs, and ideating on future iterations.

5.

Collaborate early and often, especially with product. A close, collaborative relationship with the product manager allows a cohesive vision to sing.

Demo the right fidelity to the right level of leadership. Letting higher-level leaders align on a vision before anyone else sees it and gives input creates space for a clear, collaborative vision to emerge.

ML is a very specific tool for very specific applications. Machine learning should be reserved for tasks that other algorithms, functions, and established conventions can’t handle.

Data storage requires strategy. To store data ethically while lowering data analysis costs, we have to work together to decide which data is valuable and where to store it, especially golden data.